Wednesday, December 27, 2006

Intentional Programming

So, it's been a while since I last posted anything here. It's been a busy few months and I'm just finding a little bit of time to catch up in the Christmas break. One of the major things that happened in the last couple of months was XPDay which we organised in London. The conference sold out (for the sixth year running) and thanks to everyone who came along and participated it was a great event.

One of the sessions that I enjoyed the most at the conference was Awesome Acceptance Testing presented by Dan North and Joe Walnes. Dan and Joe presented a number of different approaches to acceptance testing, and talked about what acceptance testing means for different people in the development process - often these are the same people wearing different hats at different times - customers, analysts, testers and developers. They distilled the subject to five key themes. To do acceptance testing effectively, you need: automation, a harness, a vocuabulary, a syntax, and to be capturing intent.

This last point seems very important, and in fact links together a number of different themes at the conference, and a number of different open source projects that are currently under development in the area of testing. We are trying to build tests that express their intent. The clearer they are about how the system is supposed to behave, the more use they are as a specification, and the easier it is for customers to validate them. How do we know that we are writing the right tests unless we can check them with the customer?

Also at XPDay, Tamara Petroff and I ran a workshop session on literate testing. The idea here was for customers and developers to work together to create tests for a given scenario that expressed their intention clearly enough that there could be a discussion between customer and developer focussed on the test. However, we provided a structure which meant that the resulting tests were of a form that could be readily automated, making them of direct use to the development team. Our literate testing work has resulted in the release of the LiFT framework which allows tests to be written in Java that read as natural language. At the moment the framework focusses on the web domain, but one of the things that we experimented with in the workshop was how tests for different domains might be written while maintaining the same overall structure.

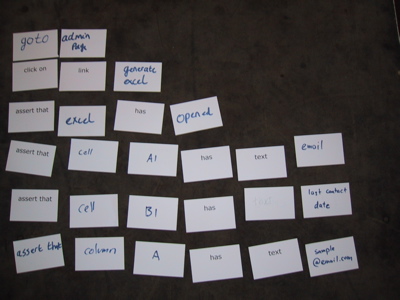

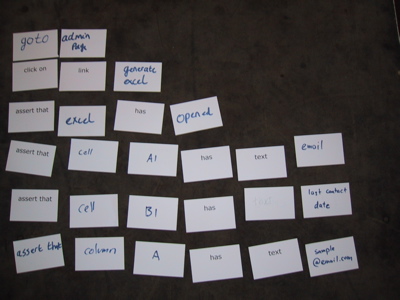

Groups worked together assembling tests usings decks of cards that we provided with various keywords printed on them. The also had some blank cards available to that they could extend the vocubulary when they needed to. This is important, as to express intent clearly, we need to be able to write our tests using the language of the domain that we are working in. If we are restricted to a fixed vocabulary by our test framework, this is very limiting, and often leads to tests being written that talk about the technology used to solve the problem (e.g. user interface widgets) rather than activity that the user is trying to carry out with the system.

One of our exercises involved writing acceptance tests for a report to be produced in the form of a spreadsheet. Most of the printed cards that we had provided were tailored to the web domain, so the teams had to produce more cards with words to describe the spreadsheet domain. From the results (in the picture above) we can see that the parts of the vocabulary to do with assertion could be reused from one domain to another, but the types of things present in each domain were different, and this is where new cards needed to be written. The pattern of printed and handwritten cards in columns struck me as highlighting the structure that is provided by the test framework. This helps to guide us towards creating tests that are automatable, but at the same time giving us the freedom in vocubulary to express our intent.

While we are discussing this topic, there are a couple of other projects worth mentioning. The

One of the reasons for the release of Hamcrest was the upcoming (sometime real soon now, apparently) release of an RC1 version of jMock2. Having used jMock2 for a couple of weeks now, there are a few things that make it feel so much better than the original jMock. They're difficult to describe, but it's now much easier to write your test first and use your IDE to reactively complete/generate the code you need to make the test pass. jMock2 also uses Hamcrest, and focuses on providing a syntax that makes tests easy to read.

I can see intentional programming becoming a theme for 2007 (although I probably don't mean exactly the same thing that Charles Simonyi does).

One of the sessions that I enjoyed the most at the conference was Awesome Acceptance Testing presented by Dan North and Joe Walnes. Dan and Joe presented a number of different approaches to acceptance testing, and talked about what acceptance testing means for different people in the development process - often these are the same people wearing different hats at different times - customers, analysts, testers and developers. They distilled the subject to five key themes. To do acceptance testing effectively, you need: automation, a harness, a vocuabulary, a syntax, and to be capturing intent.

This last point seems very important, and in fact links together a number of different themes at the conference, and a number of different open source projects that are currently under development in the area of testing. We are trying to build tests that express their intent. The clearer they are about how the system is supposed to behave, the more use they are as a specification, and the easier it is for customers to validate them. How do we know that we are writing the right tests unless we can check them with the customer?

Also at XPDay, Tamara Petroff and I ran a workshop session on literate testing. The idea here was for customers and developers to work together to create tests for a given scenario that expressed their intention clearly enough that there could be a discussion between customer and developer focussed on the test. However, we provided a structure which meant that the resulting tests were of a form that could be readily automated, making them of direct use to the development team. Our literate testing work has resulted in the release of the LiFT framework which allows tests to be written in Java that read as natural language. At the moment the framework focusses on the web domain, but one of the things that we experimented with in the workshop was how tests for different domains might be written while maintaining the same overall structure.

Groups worked together assembling tests usings decks of cards that we provided with various keywords printed on them. The also had some blank cards available to that they could extend the vocubulary when they needed to. This is important, as to express intent clearly, we need to be able to write our tests using the language of the domain that we are working in. If we are restricted to a fixed vocabulary by our test framework, this is very limiting, and often leads to tests being written that talk about the technology used to solve the problem (e.g. user interface widgets) rather than activity that the user is trying to carry out with the system.

One of our exercises involved writing acceptance tests for a report to be produced in the form of a spreadsheet. Most of the printed cards that we had provided were tailored to the web domain, so the teams had to produce more cards with words to describe the spreadsheet domain. From the results (in the picture above) we can see that the parts of the vocabulary to do with assertion could be reused from one domain to another, but the types of things present in each domain were different, and this is where new cards needed to be written. The pattern of printed and handwritten cards in columns struck me as highlighting the structure that is provided by the test framework. This helps to guide us towards creating tests that are automatable, but at the same time giving us the freedom in vocubulary to express our intent.

While we are discussing this topic, there are a couple of other projects worth mentioning. The

assertThat syntax that we use in LiFT originally came from Joe Walnes, and was built into jMock. Not the matching/constraints part of jMock has been extracted into its own extensible library, Hamcrest. This has recently seen its 1.0 release and is well worth checking out.One of the reasons for the release of Hamcrest was the upcoming (sometime real soon now, apparently) release of an RC1 version of jMock2. Having used jMock2 for a couple of weeks now, there are a few things that make it feel so much better than the original jMock. They're difficult to describe, but it's now much easier to write your test first and use your IDE to reactively complete/generate the code you need to make the test pass. jMock2 also uses Hamcrest, and focuses on providing a syntax that makes tests easy to read.

I can see intentional programming becoming a theme for 2007 (although I probably don't mean exactly the same thing that Charles Simonyi does).